Physics of the Senses Series: Auditory Perception or Hearing

- Camille Rebouillat-Sarti

- Sep 18, 2022

Updated: Sep 14, 2024

Foreword

Human beings are equipped with a variety of senses that help them navigate the world around them, including five basic ones: sight, hearing, touch, smell, and taste. The organs associated with each sense report sensations to the brain, which then translates them into understandable information via a complex yet fascinating process. Bright colours, a loud thud, an acute pain, a familiar flavour on the tongue or a sweet smell that tickles the nose: all these stimuli are put together into one big picture that lets us identify our surroundings. However, while these systems are remarkably sophisticated in humans, some animals have super sensors. Felines are well known for their nocturnal vision, elephants have the most powerful nose in the animal world, and bats rely on sound waves to hunt. Magnetoreception, the ability to detect the Earth’s magnetic field, is even considered a sixth sense that birds, along with certain mammals, reptiles, and fish, are gifted with.

The Physics of the Senses Series explores the physical processes that make up each sense, including the so-called sixth sense, and explains the extent to which they grant certain species 'super-capacities'.

2. Physics of the Senses Series: Auditory Perception or Hearing

All senses provide substantial information, which allows for interactions that are as meaningful as they are crucial to survival for a tremendous number of species. Auditory perception, or hearing, while often overlooked in the highly visual contemporary world, nonetheless comes second in the ‘hierarchy of senses’, as demonstrated by an investigation from the University of Regensburg, Germany (Hutmacher, 2019). The latter showed that only 18% of the participants in the study’s survey declared being most scared of losing this sensory modality, whereas 75% of them chose sight (Hutmacher, 2019, p.3). While the reasons behind such a disparity are debated, Hutmacher explains that vision being ‘more accurate and reliable than the other senses’ is one of the textbook explanations (p.3). The Colavita effect, the fact that 'when a visual and an auditory stimulus are presented simultaneously, participants show a strong tendency to respond to the visual stimulus', further reinforces this idea (Hutmacher, 2019, p.3). 'Even more, participants frequently report not having perceived the auditory stimulus at all' (Hutmacher, 2019, pp.3-4).

Another theory stems from the ‘Gutenberg Revolution’, that is, the invention of the printing press, which brought about ‘the shift from an oral, hearing-dominated to a written, sight-dominated culture’ (Hutmacher, 2019, p.4). Nevertheless, auditory perception, defined as the ‘ability to perceive sound by detecting vibrations’ (Bendong, 2015, p.2), remains an important form of communication, forming the basis of speech, music and hazard perception (Plack, 2018). ‘The study for sounds has also been developed into an important branch of physics – acoustics’ (Bendong, 2015, p.2), and the translation of mechanical waves into the sensation of sound involves multiple physiological components. However, as Hutmacher’s investigation demonstrated (2019, p.1), studies on this modality are three to four times less numerous than those on sight. This article will therefore start by describing the functioning of the ear, the organ in charge of receiving auditory stimuli, and then explore abilities such as echolocation and earless hearing, which bats, worms and even some humans possess.

To start with, the ‘design of the human ear is one of nature’s engineering marvels’ (Sundar et al., 2021). It is divided into three sections: the outer, middle, and inner ear, each participating in transforming sound energy into impulses to be interpreted by the brain. Sound originates from the back-and-forth motion of air molecules in the audible frequency band, i.e., 20 to 20 000 Hz. Although they are inaudible, infrasonic vibrations (below 20 Hz) are detected through the skull, jawbone and skin, and participate in shaping the experience of music: ‘[t]he physical properties of these vibrations translate to the pitch and loudness of the sound when perceived by a human ear and other parts of the head’ (Sundar et al., 2021, p.1). Just like the eye, the ear is therefore a teleceptor (Sybesma, 1989), an organ capable of responding to distant stimuli, chiefly transmitted through air (Sundar et al., 2021). Furthermore, hearing has one advantage over sight: sound waves propagate around objects while light gets reflected by them, granting humans the capacity to hear a source that is not in their line of sight (Sundar et al., 2021).
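To give a sense of scale to the audible band quoted above, the wavelength of a tone follows from λ = c / f. The short sketch below is illustrative only: the speed of sound (343 m/s, roughly dry air at 20 °C) and the helper names `wavelength_m` and `classify_frequency` are assumptions made for this example, not values or methods taken from the cited sources.

```python
# Assumption: speed of sound in dry air at about 20 °C (not from the article).
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def wavelength_m(frequency_hz, speed=SPEED_OF_SOUND_AIR):
    """Return the wavelength in metres of a tone, using lambda = c / f."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


def classify_frequency(frequency_hz):
    """Label a frequency relative to the 20 Hz to 20 000 Hz human band."""
    if frequency_hz < 20:
        return "infrasound"
    if frequency_hz <= 20_000:
        return "audible"
    return "ultrasound"


if __name__ == "__main__":
    # Band edges and the most sensitive region mentioned in the article.
    for f in (10, 20, 1500, 4000, 20_000, 45_000):
        print(f"{f:>6} Hz  {classify_frequency(f):<10}  "
              f"wavelength = {wavelength_m(f):.4f} m")
```

Under this assumed speed of sound, the audible band spans wavelengths from roughly 17 m down to roughly 17 mm, sizes comparable to everyday obstacles, which is consistent with sound bending around objects that block light.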

As Dr. Sundar outlines, the 'human ear has many modules, such as the pinna, auditory canal, eardrum, ossicles, eustachian tube, cochlea, semicircular canals, cochlear nerve, and vestibular nerve, each containing multiple subparts' (Sundar et al., 2021, p.1). As sound waves enter the outer ear, they travel through the auditory canal, a narrow passageway which leads to the eardrum. The latter vibrates in turn, passing the signal on to three tiny, delicate bones in the middle ear: the malleus, incus, and stapes, more commonly referred to as the hammer, anvil, and stirrup. These bones amplify the sound vibrations and transmit them to the inner ear, which houses the snail-shaped transducer called the cochlea, ‘that converts fluid motion to action potentials’ (Sundar et al., 2021, p.2). The cochlear fluid ripples under the acoustic stimulation, generating a travelling wave along the basilar membrane, which splits the cochlea into an upper and a lower part (Areias et al., 2021). The inner ear also contains the balance mechanism and the auditory nerve. ‘As of now’, Sundar explains, ‘most of the components of the ear are understood, though not completely’ (Sundar et al., 2021, p.2).

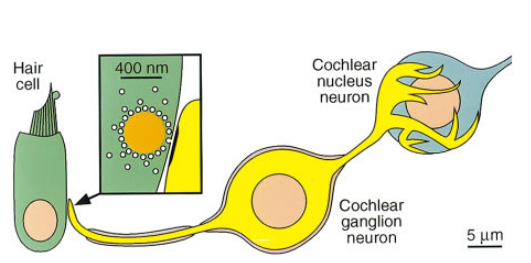

Besides, the ‘linchpin of the auditory system is the hair cell’ (Hudspeth, 1997, p.947), a sensory cell that sits on top of the basilar membrane and ‘rides’ the travelling wave. As the hair cells move up and down, actin-based protrusions known as stereocilia bend, causing channels at their tips to open and let a rush of ions flow into the cells. This is mechanoelectrical transduction, the conversion of ‘physical force from sound, head movement and gravity into an electric signal’ (McGrath et al., 2017). This mechanism ‘affords remarkable sensitivity through the lack of a threshold step and provides outstanding temporal resolution through the absence of slow chemical processes’ (Hudspeth, 1997, pp.947-8). The electrochemical impulses are then carried to the brain’s cerebral cortex via the eighth cranial nerve, which includes the auditory nerve. Sound is processed in one of the cortex’s four major lobes, the temporal lobe, situated ‘in the middle cranial fossa, a space located close to the skull base’ (Patel et al., 2021, p.1), and interpreted in the form of the familiar sense of hearing.

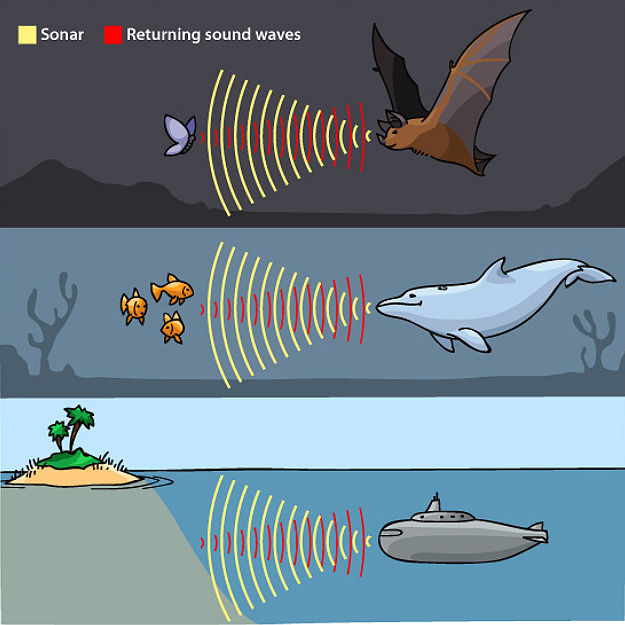

Human ears are most sensitive to frequencies ranging from 1500 to 4000 Hz, ‘which covers normal speech’ (Sundar et al., 2021, p.1). However, the audible range varies greatly even among mammals. Dogs, for instance, can hear ultrasound up to 45 kilohertz and cats up to 64 kilohertz, while elephants produce infrasound from 1 to 20 hertz (Warfield, 1973). The greater wax moth has even been crowned ‘best ears’ in the animal kingdom for sensing frequencies of up to 300 kilohertz, fifteen times the upper human limit of 20 kilohertz (Moir et al., 2013). Such acute hearing is essential to survival in wild habitats. In fact, certain species have evolved sophisticated methods to navigate their surroundings by relying on their auditory perception. Nature’s very own sonar system, echolocation, is a technique common to hundreds of species, including dolphins, oilbirds, and most famously, bats. This phenomenon, also known as biosonar, takes place when animals emit high-frequency sound pulses and listen to the echoes returning from neighbouring objects. Each echo carries information about the object’s size, shape and distance which, once compared to the outgoing pulse, makes it possible to build an image of the surroundings and therefore to distinguish between obstacles and prey (Jones, 2005). Because high frequencies have short wavelengths, they ‘reflect strongly from very small targets such as insects’ (Jones, 2005, p.485). Some remarkable acoustic features observed in bats include ‘doppler shift compensation, whispering echolocation and nasal emission of sound’ (Jones and Teeling, 2006, p.1). Moreover, ‘the echolocation signals produced by bats may exceed 130 decibels in intensity at 10 centimetres from the bats’ mouths, making them some of the most intense airborne animal signals yet recorded’ (Jones, 2005, p.485).
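The ranging principle behind echolocation can be put into numbers: the pulse travels to the target and back, so the distance is half the round-trip delay multiplied by the speed of sound. The sketch below is a simplified illustration, not a model of how bats actually process echoes. The speed of sound (343 m/s in air), the 10 ms delay, the 50 kHz call frequency and the function names `echo_distance_m` and `wavelength_mm` are all assumptions chosen for this example.

```python
# Assumption: speed of sound in dry air at about 20 °C (not from the article).
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def echo_distance_m(round_trip_delay_s, speed=SPEED_OF_SOUND_AIR):
    """Distance to a target from the delay between pulse and echo.

    The sound covers the distance twice (out and back), hence the division by 2.
    """
    if round_trip_delay_s < 0:
        raise ValueError("delay cannot be negative")
    return speed * round_trip_delay_s / 2.0


def wavelength_mm(frequency_khz, speed=SPEED_OF_SOUND_AIR):
    """Wavelength in millimetres; (m/s) / kHz conveniently yields mm."""
    if frequency_khz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_khz


if __name__ == "__main__":
    delay = 0.010        # hypothetical 10 ms round-trip delay
    call_khz = 50.0      # hypothetical ultrasonic call frequency
    print(f"target at about {echo_distance_m(delay):.3f} m")
    print(f"wavelength at {call_khz:.0f} kHz: {wavelength_mm(call_khz):.2f} mm")
    print(f"wavelength at 4 kHz (speech range): {wavelength_mm(4.0):.2f} mm")
```

With these assumed values, an ultrasonic pulse has a wavelength of a few millimetres, on the scale of an insect, whereas a speech-range tone has a wavelength of several centimetres. This matches the point quoted from Jones (2005) that short wavelengths reflect strongly from very small targets.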

Human echolocation is also a 'vibrant area of research in psychology and the neurosciences' (Thaler and Goodale, 2016). It has now been established that ‘many blind individuals produce sound emissions and use the returning echoes […] in a similar manner to bats navigating in the dark’ (Kolarik et al., 2014, p.3), a faculty that normally sighted people can also develop. The visual regions of blind echolocators’ brains activate when they listen to their own echoes, a striking case of neuroplasticity which suggests that echolocation can act as a sensory replacement for sight in visually impaired individuals (Thaler et al., 2011). Research on this process is arduous, though, since ‘many aspects of echolocation are not yet understood, and the reasons for individual differences in echolocation ability have not been determined’ (Kolarik et al., 2014, p.23).

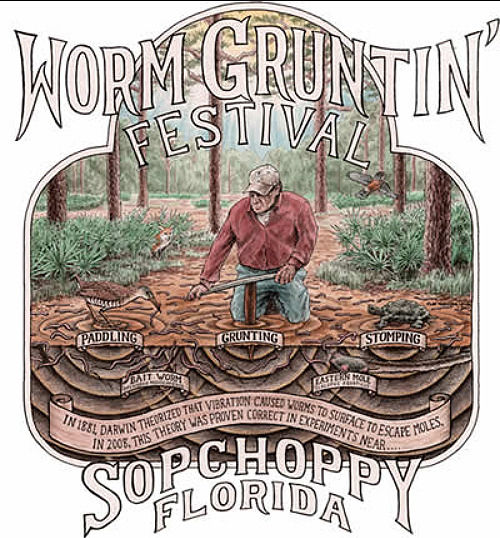

One of nature’s quirks can be observed in worms’ aptitude to sense the vibrations of neighbouring animals despite being earless. A study has recently shown that the nematode C. elegans does not merely perceive vibrations on the ground surface through the sense of touch, as was previously believed, but also responds to airborne sound, thanks to what researchers believe to be a whole-body cochlea. The worms’ auditory sensory neurons are closely connected to their skin, which vibrates under sound wave stimulation, causing the fluid inside the worm to ripple as well, in the same fashion as the cochlear fluid (Iliff et al., 2021). Sensitivity to vibration also lies behind the peculiar technique called ‘worm grunting’ or worm charming, as Catania (2008) explains: ‘[i]n a number of parts of the southeastern United States, families having handed down traditional knowledge for collecting earthworms by vibrating the ground’ (p.1). As man-made vibrations are communicated to the soil through hand tools or power equipment, hundreds, if not thousands, of earthworms seem to magically rise up from the ground, exiting their burrows to be collected for fishing.

An entire industry of worm charming even developed in the 1960s and 1970s in Florida, ‘with thousands of people grunting for worms for supplemental income or as the major means of supporting their families’ (Catania, 2008, p.2). The study demonstrated that the earthworms’ strong reaction to such soil vibrations stems from the fact that humans unknowingly mimic one of their predators, the mole, triggering an escape response. Further, ‘both wood turtles and herring gulls vibrate the ground to elicit earthworm escapes, indicating that a range of predators may exploit the predator-prey relationship between earthworms and moles’ (Catania, 2008, p.2). Humans, for their part, have managed to play the role of ‘rare enemy’, activating the hard-wired escape circuitry of the invertebrates and thus ‘exploiting the consequences of a sensory arms race’ (Catania, 2008, p.1).

To summarise, the biophysics of the ear is very complex, as the response to auditory stimuli depends upon the collaboration of various components: the pinna, the ear canal, the three ossicles, the cochlea (seat of mechanoelectrical transduction through hair cells) and the auditory nerve. The brain reconstructs the signal into the familiar sensation of sound, which, for many animals and a few humans, also results in the detailed mapping of their environment through the remarkable process of echolocation. Species such as worms have even developed hearing without ears, using their skin as their prime teleceptor. Hearing is therefore a fascinating sense, which remains understudied in comparison to sight, whether in the fields of physics, biology or even psychology (Plack, 2018, p.1). Further research, however, could yield incredible results, notably in brain plasticity, human echolocation, hair cell regeneration with regard to hearing loss, and tinnitus, for which there is currently no cure (McFerran et al., 2019).

Bibliographical References

Areias, B., Parente, M., Gentil, F., & Jorge, R. (2021). Influence of the basilar membrane shape and mechanical properties in the cochlear response: A numerical study. Proceedings Of The Institution Of Mechanical Engineers, Part H: Journal Of Engineering In Medicine, 235 (7), 743-750. https://doi.org/10.1177/09544119211003443.

Bendong, L. (2015). The Science of Sense. Department Of Mathematics, Tongji University, Shanghai 200092, China. https://doi.org/10.13140/RG.2.1.3053.2004.

Catania, K. (2008). Worm Grunting, Fiddling, and Charming—Humans Unknowingly Mimic a Predator to Harvest Bait. Plos ONE, 3 (10). https://doi.org/10.1371/journal.pone.0003472.

Hudspeth, A. (1997). How Hearing Happens. Neuron, 19 (5), 947-950. https://doi.org/10.1016/s0896-6273(00)80385-2.

Hutmacher, F. (2019). Why Is There So Much More Research on Vision Than on Any Other Sensory Modality?. Frontiers In Psychology, 10. https://doi.org/10.3389/fpsyg.2019.02246.

Iliff, A., Wang, C., Ronan, E., Hake, A., Guo, Y., & Li, X. et al. (2021). The nematode C. elegans senses airborne sound. Neuron, 109 (22). https://doi.org/10.1016/j.neuron.2021.08.035.

Jones, G. (2005). Echolocation. Current Biology, 15 (13), R484-R488. https://doi.org/10.1016/j.cub.2005.06.051.

Jones, G., & Teeling, E. (2006). The evolution of echolocation in bats. Trends In Ecology & Evolution, 21 (3), 149-156. https://doi.org/10.1016/j.tree.2006.01.001.

Kolarik, A., Cirstea, S., Pardhan, S., & Moore, B. (2014). A summary of research investigating echolocation abilities of blind and sighted humans. Hearing Research, 310, 1-36. https://doi.org/10.1016/j.heares.2014.01.010.

McFerran, D., Stockdale, D., Holme, R., Large, C., & Baguley, D. (2019). Why Is There No Cure for Tinnitus?. Frontiers In Neuroscience, 13. https://doi.org/10.3389/fnins.2019.00802.

McGrath, J., Roy, P., & Perrin, B. (2017). Stereocilia morphogenesis and maintenance through regulation of actin stability. Seminars In Cell & Developmental Biology, 65, 88-95. https://doi.org/10.1016/j.semcdb.2016.08.017.

Moir, H., Jackson, J., & Windmill, J. (2013). Extremely high frequency sensitivity in a ‘simple’ ear. Biology Letters, 9 (4). https://doi.org/10.1098/rsbl.2013.0241.

Patel, A., Biso, G., & Fowler, J. B. (2021). Neuroanatomy, Temporal Lobe. In StatPearls. StatPearls Publishing. https://pubmed.ncbi.nlm.nih.gov/30137797/.

Plack, C. (2018). The Sense of Hearing. Routledge, 3, 1-16.

Sundar, P., Chowdhury, C., & Kamarthi, S. (2021). Evaluation of Human Ear Anatomy and Functionality by Axiomatic Design. Biomimetics, 6 (2), 31. https://doi.org/10.3390/biomimetics6020031.

Sybesma, C. (1989). Biophysics of the sensory systems. Biophysics, 255-279. https://doi.org/10.1007/978-94-009-2239-6_11.

Thaler, L., Arnott, S., & Goodale, M. (2011). Neural Correlates of Natural Human Echolocation in Early and Late Blind Echolocation Experts. Plos ONE, 6 (5). https://doi.org/10.1371/journal.pone.0020162.

Thaler, L., & Goodale, M. (2016). Echolocation in humans: an overview. Wires Cognitive Science, 7(6), 382-393. https://doi.org/10.1002/wcs.1408.

Warfield, D. (1973). The study of hearing in animals. W Gay, ed., Methods of Animal Experimentation, 4. Academic Press, London, 43-143.

Visual Sources

Figure 1: Bendt, J. (2018). Hearing [Image]. Retrieved from: https://www.scientificamerican.com/article/hearing-aids-are-finally-entering-the-21st-century/.

Figure 2: Ethelberg Thimm, S. (2017). Sound [Image]. Retrieved from: https://solveigthimm.dk/sound-illustration-friday-submission/.

Figure 3: NIDCD. (2015). Anatomy of the Ear [Image]. Retrieved from: https://www.nidcd.nih.gov/health/how-do-we-hear.

Figure 4: Hudspeth, A. (1997). Synaptic Specializations in the Auditory System [Image]. Retrieved from: https://doi.org/10.1016/S0896-6273(00)80385-2.

Figure 5: Éduscol. (2016). L’écholocalisation [Image]. Retrieved from: https://askabiologist.asu.edu/%C3%A9cholocation-0.

Figure 6: Sopchoppy. (2017). Worm Grunting Festival, Sopchoppy Festival [Image]. Retrieved from: https://backroadplanet.com/sopchoppy-worm-grunting-festival/.
